
3080 or go home


Easy for you to say. You have LoadsaMoney.

Haha

I imagine that would change if the next gen consoles let people use M+K.

A PS5 currently has the same number of cores as a 2070 and fewer than a 5700XT.

So yes, even with some RDNA2 improvement, it's likely a PS5 will be at 2070/5700XT levels of performance, which by next gen will probably be even less than a 3060, with the 3070 likely being at 2080Ti levels.

Same story with the XSX, even though that has nearly 50% more shaders than the PS5.

If I didn't do the odd bit of video editing and work at home then console with M+K would be more suitable, right up until the point I need to use an unusual peripheral like HOTAS.

Consoles are closed platforms. As long as you don't need to pop out of their approved hardware and software lists you are golden. I would, though.

He is quite spot on. The ps5 gpu had to be heavily overclocked to reach the tflops of a 5700xt. The xbox series x is about 10-15% more powerful which brings it closer to a 2070 super. This will be mid range level of performance once rdna 2 and ampere gpus are out which has usually been the case when next gen consoles come out. They become outdated very quickly.

No way. The PS5 and Xbox series X are going to be faster than that. If the consoles are only that level of performance that means no uptake because of the change in process and no uptake because of RDNA 2. AMD are saying that it's an up to 50% improvement in performance per watt. Even if they only get half that it will still be around 2080 levels of performance.

Yeah a console is no good to me because I can't use it to work from home or edit videos. I can't put frozen fish in the toaster either.

Tflops are completely meaningless for gaming.

A Vega 56 has more Tflops than my 5700XT and yet the 5700XT is 40% faster in games.

The new XBox GPU is around RTX 2080 or more.
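For anyone who wants the paper maths behind the Tflops argument, here is a rough sketch. It uses the usual FP32 formula (shaders x 2 operations per clock x clock speed). The function name `fp32_tflops` and the `SPECS` table are made up for illustration, and the shader counts and boost clocks are assumed from public spec sheets, so treat them as approximate.

```python
# Paper FP32 throughput: shaders * 2 ops per clock (fused multiply-add) * clock.
# Shader counts and boost clocks (GHz) are ASSUMED from public spec sheets.
SPECS = {
    "Vega 56": (3584, 1.471),
    "RX 5700 XT": (2560, 1.905),
    "PS5": (2304, 2.23),
    "Xbox Series X": (3328, 1.825),
}


def fp32_tflops(shaders: int, clock_ghz: float) -> float:
    """Theoretical FP32 TFLOPS; says nothing about real game performance."""
    return shaders * 2 * clock_ghz / 1000.0


paper = {name: fp32_tflops(*spec) for name, spec in SPECS.items()}

# Print the cards from highest to lowest paper throughput.
for name, value in sorted(paper.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:14s} {value:5.2f} TFLOPS")

# Ratio of shader counts, XSX relative to PS5.
xsx_vs_ps5_shaders = SPECS["Xbox Series X"][0] / SPECS["PS5"][0]
print(f"XSX has {100 * (xsx_vs_ps5_shaders - 1):.0f}% more shaders than PS5")
```

On these assumed numbers the Vega 56 lands above the 5700XT on paper, which is the whole point: the figure only counts theoretical operations and says nothing about how well an architecture turns them into frames.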

Console peasant

Looks at sig. Who ya callin a peasant? I don’t see no Titan RTX, 3950X or OLED in your sig!

Performance per watt of what exactly is the question. That certainly won’t be 50% more performance per shader, just more efficient.

Sure, there will be some improvement, but it won’t be anywhere near 50% in terms of performance increase. RDNA2’s big thing will be ray tracing.

Still, on a bad day the 2080Ti should = 3070. If Ampere gets a big uplift going to 7nm, which it should, the Ti could even become 3060 level.

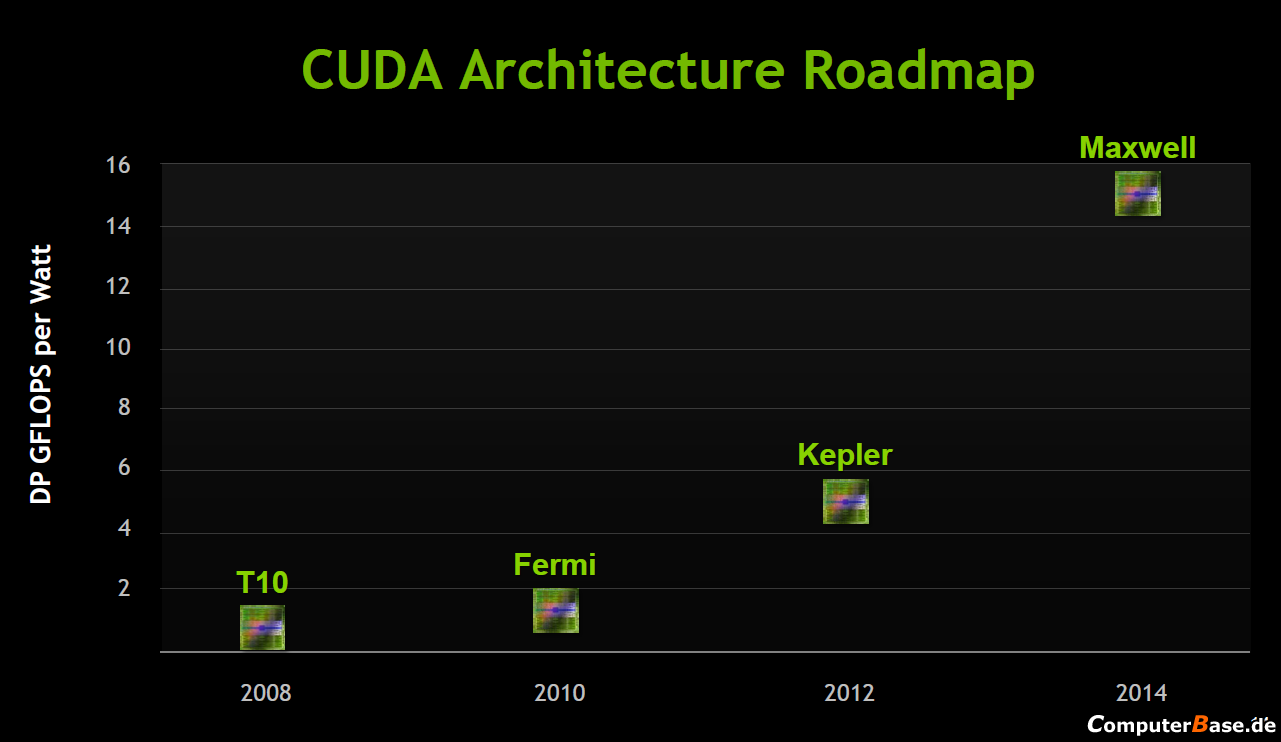

It's important for people to realise that Performance per Watt is a bit of a bullshat measurement that on its own doesn't actually mean anything. Who started with it first? It was AMD, right, looking for a new way to market its products?

The main problem with that statement "50% more performance per watt" is that you don't know what wattage they are talking about. The curve is not flat and never will be - performance might be 50% improved at 20w but only 20% improved at 400w. If that's the case, is 20w enough for your gpu? No, you want 200w, so maybe it's not actually 50% improved. Who knows, cause the marketing team at AMD won't tell you.
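To put numbers on the "curve is not flat" point, here is a toy sketch. Every figure in it is invented for illustration (the `old_gen` and `new_gen` tables are hypothetical performance scores at three power levels, not measurements of any real GPU), chosen only so the 20w and 400w cases match the example above.

```python
# HYPOTHETICAL performance scores at a given board power (watts -> score).
# These are made-up numbers, not benchmarks of any real card.
old_gen = {20: 10.0, 200: 70.0, 400: 100.0}
new_gen = {20: 15.0, 200: 91.0, 400: 120.0}


def perf_per_watt_gain(old: dict, new: dict, watts: int) -> float:
    """Percentage improvement in performance per watt at one power level."""
    old_ppw = old[watts] / watts
    new_ppw = new[watts] / watts
    return (new_ppw / old_ppw - 1.0) * 100.0


# Same two cards, three different "performance per watt" headlines.
gains = {w: round(perf_per_watt_gain(old_gen, new_gen, w), 1) for w in old_gen}

for watts, gain in gains.items():
    print(f"at {watts:3d}w: {gain:.0f}% better performance per watt")
```

With these invented curves the same pair of cards gives a 50% headline at 20w and only 20% at 400w, so the claim means little until you know which point on the curve was picked.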
