NVIDIA ‘Ampere’ 8nm Graphics Cards

- Thread starter LoadsaMoney

Link to the 3070 result? I can't see any posts in the last few pages that mention the 3070, so I'm not sure what you're talking about.

Don't bother. It was a @Poneros special.

Really glad I 'invested' in a 2080 Ti 20 months ago at £1299. Really glad... really, really glad.

Oh wait...

Waiting for the punchline

...and sold it for £850 last month

It’s not one of these, is it?

https://www.gamersnexus.net/hwreviews/3612-abkoncore-ramesses-780-case-review-really-bad

Haha nah. Lian Li O-11 Air.

I buy high, sell low. I know things.

It looks like the 3080 "chugs" Doom.

Didn't say it would go over 10 GB.

Honestly, the thing holding me back with all this is the whole PCIe 4 question. I really don't want to fork out for a whole new rig if it's going to be an issue in a year's time because it doesn't have PCIe 4 or has some kind of weird compatibility issue. I really don't want to hold out until the middle of next year though, especially with Cyberpunk around the corner, and I also don't want to go AMD after having issues with them many moons ago.

You're wise to be concerned; these companies have a habit of changing things at the last minute. Just because LGA1200 is capable of PCIe 4.0 doesn't mean each manufacturer implemented it properly.

Far safer to wait for LGA1700 (next year), which will feature DDR5, USB4, and PCIe 4.0, and possibly 5.0! AM5 from AMD should also have the same features.

Then go for a decent Z490 board with a 10700K or 10600K now, then just swap the CPU out for Rocket Lake when it arrives.

LGA1700 is not next year, it's 2022.

Don't be a plonker, buy Ryzen if you want PCIe 4. Having issues with tech is normal. I have had issues with both Nvidia and AMD; should I just give up?

I did a psu calculator with my 3700x 2 nvke drives, 2 3.5inch hdd, 6 fans, keyboard, mouse and external drive. And I have 110w headroom even at max load.im going for 3080

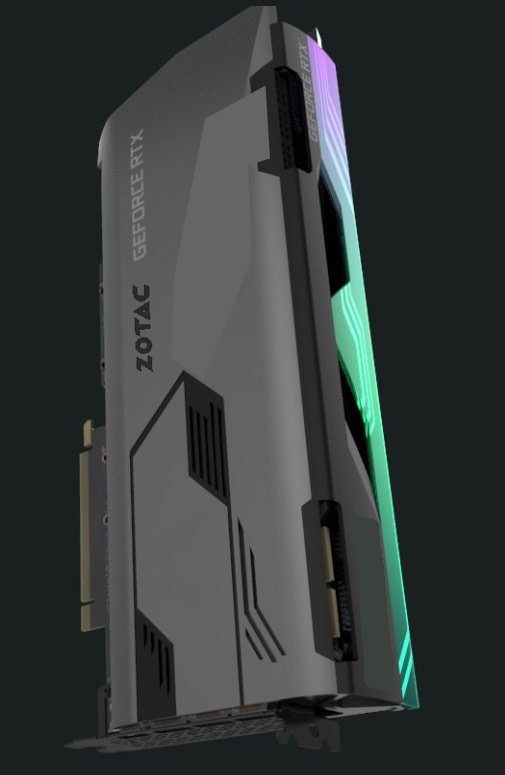

Zotac card or FE card, prefer the FE design but also prefer the 5 year warranty on the Zotac

Just hope my 650w evga supernova 2 will run it with a 3700x

My psu is 650w 80rated gold. With probably 17 hours gaming use in a year. Unless yours is old I wouldn't worry.

I even ordered a psu then cancelled this morning with confidence.
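For anyone wanting to sanity-check a PSU calculator result by hand, the headroom figure is just the PSU rating minus the summed estimated draw. Below is a minimal Python sketch of that arithmetic. The function name `psu_headroom` and every per-component wattage are hypothetical placeholders, picked only so the total matches the 540W load implied by a 650W unit with 110W of headroom; they are not measurements or manufacturer figures.

```python
def psu_headroom(psu_watts, estimated_draw_watts):
    """Return (total_load, headroom) in watts from per-component estimates."""
    total_load = sum(estimated_draw_watts.values())
    return total_load, psu_watts - total_load


# Hypothetical per-component estimates in watts (placeholders, not measurements).
build = {
    "gpu": 320,
    "cpu": 90,
    "nvme_drives": 2 * 8,
    "hdds": 2 * 10,
    "fans": 6 * 3,
    "motherboard_ram_peripherals": 76,
}

total_load, headroom = psu_headroom(650, build)
print(f"load {total_load} W, headroom {headroom} W, {total_load / 650:.0%} of rating")
```

With these placeholder numbers the load comes out at 540W, roughly 83% of a 650W rating. Swap in the estimates from whichever calculator you trust to get a figure for your own build.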

True. I’d like to go 4K, but I’m not paying nearly a grand for a 27-inch 4K display that does 144Hz. Once the monitors come down to reasonable prices, I’ll make the jump. Till then, I’m sticking with 1440p 144Hz.

Exactly that.

Can you explain this to me, please? I have never used it before, and it occurred to me today that with a 3080 at 1440p@144Hz I could upscale my image for better IQ.

But I don't know whether you just set the res to, say, 4K in a game and play as normal on a 1440p panel, or whether I have to do something specific in the Nvidia control panel/GeForce Experience to make it work.

Turn on DSR in the NVIDIA control panel and pick a virtual resolution 2x, 3x, etc. your monitor's native resolution. The GPU renders at that new resolution, then downscales back to your native monitor resolution. You end up with much sharper scenes and much less need for AA, which tries to fix the problem backwards (a band-aid). In fact, turning off AA and using DSR is the way to go. The higher the DSR multiplier the better, but you need the horsepower. Forget DSR for desktop work; it's not good for that.
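To put numbers on that, here is a minimal Python sketch of the resolution arithmetic. It assumes the DSR factor multiplies the total pixel count, so each axis scales by the square root of the factor. The function name `dsr_resolution` is made up for this example and is not part of any NVIDIA tool.

```python
import math


def dsr_resolution(native_width, native_height, factor):
    """Render resolution for a DSR factor, assuming the factor scales total pixel count."""
    axis_scale = math.sqrt(factor)  # per-axis scale under that assumption
    return round(native_width * axis_scale), round(native_height * axis_scale)


# A 2560x1440 panel at two example factors.
for factor in (2.25, 4.00):
    width, height = dsr_resolution(2560, 1440, factor)
    print(f"{factor:.2f}x -> {width}x{height}")
```

Under that assumption, a 2.25x factor on a 1440p panel works out to a 3840x2160 render and 4.00x to 5120x2880, which the GPU then scales back down to the panel's 2560x1440.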

Does anyone know the names of the reviewers who were featured in Nvidia's launch when they witnessed the 3090 at 8K? I've been trying to find them on YouTube but can't.

My preference is Zotac for the warranty, but it will come down to whichever card I can get a waterblock for quickly. That would now appear to mean it won't be the Founders Edition, as nobody is making waterblocks for them because they are not "reference" this generation, which is a shame.

Would you not want to wait for the Zotac AMP though?

Good lad