Associate · Joined: 8 May 2014 · Posts: 96

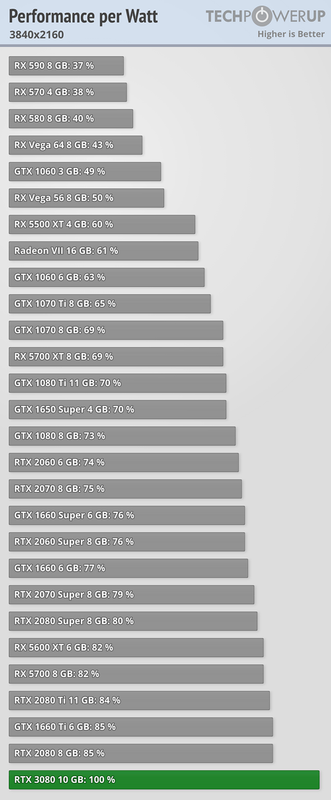

I think you've completely missed the point of the criticism, which had more to do with the insane power draw and heat relative to the die size and the performance. Let's not get too fanboyish here.

I don't think anyone should really care about power consumption, provided the performance is good enough. I mean, you can run the 3080 on a 650 W power supply, which isn't an issue for most people at all, unless there's an underlying agenda, which seems to be the case.

I'm giving up. This is bizarre. Power consumption = heat. There's nothing more to say.

I think we just wait and see what happens and what actual mainstream users think, not after a day but after a month or two. And I predict they won't be overjoyed.

BTW, that 650 W power supply had better not be some cheap ****** clone, and it should be at least 80 Plus Bronze, preferably higher. Even then, people are going to run into problems, especially if they have the latest Intel processors.