AMD Navi 23 ‘NVIDIA Killer’ GPU Rumored to Support Hardware Ray Tracing, Coming Next Year

- Thread starter Gregster

- Status

- Not open for further replies.

Within 5% of the 2080ti

Out of interest where in this do we think the 3070 will fall?

Ballpark 2080ti performance, so -5% or +5% depending on the game.

Caporegime

Within 5% of the 2080ti

Yeah, about that.

It's an 8GB card vs 10GB on the 3080, which matters between 1440p and 4K, but at 4K the 3080 is 32% faster than the 2080TI.

The 3070 has 5888 Shaders, compared with 8704 Shaders on the 3080.

The 3080 has 48% more shaders than the 3070. The 3080 is only 32% faster than the 2080TI yet has 48% more shaders than the 3070, so there is a deficit there of 16 percentage points. I'm going to put the 3070 between the 2080 Super and 2080TI, at about 90% of a 2080TI.
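The shader arithmetic above can be checked with a rough sketch. It assumes performance scales linearly with shader count, which real cards rarely do, so treat the result as a ballpark only. The variable names are made up for illustration; the shader counts and the 32% figure are the ones quoted in the post.

```python
# Rough check of the shader maths, using the figures quoted in the post.
# Assumption: performance scales linearly with shader count (it rarely does).
SHADERS_3070 = 5888
SHADERS_3080 = 8704
PERF_3080_VS_2080TI = 1.32  # 3080 quoted as 32% faster than the 2080TI at 4K

# How many more shaders the 3080 has than the 3070.
shader_ratio = SHADERS_3080 / SHADERS_3070

# Scale the 3080's lead over the 2080TI down by the shader ratio.
est_3070_vs_2080ti = PERF_3080_VS_2080TI / shader_ratio

print(f"3080 has {100 * (shader_ratio - 1):.0f}% more shaders than the 3070")
print(f"Estimated 3070 performance: {100 * est_3070_vs_2080ti:.0f}% of a 2080TI")
```

Under that linear-scaling assumption the 3070 lands at roughly 89% of a 2080TI, which is in line with the 90% guess.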

About 25% faster than a 5700XT, a 40 CU RDNA2 GPU running at 2.23Ghz (6700XT) would trade blows with a 3070.

I'm going to put the 3070 between the 2080 Super and 2080TI, 90% a 2080TI

Is that at 4k or the 1440p you posted? I can see that for 4k.

About 25% faster than a 5700XT, a 40 CU RDNA2 GPU running at 2.23Ghz (6700XT) would trade blows with a 3070.

Agree. The only thing AMD can possibly mess up when competing with the 3070 will be price.

Associate

Big Navi the 80cu one 10% quicker than the 2080ti for £600 I reckon.

Nvidia will counter with a 16GB 3070ti for the same price.

Soldato

Big Navi the 80cu one 10% quicker than the 2080ti for £600 I reckon.

Nvidia will counter with a 16GB 3070ti for the same price.

I can't imagine a world where a card with double the CU count (not to mention any IPC changes and/or clock speed bumps) would only gain ~40-45% over the 5700XT. I just don't see how that would be anything but a complete failure.

I do think it's genuinely bizarre how people seem to think AMD have spent two years targeting the performance metric of a two-year-old, previously released card and not the one they are releasing to actually compete with.

There's having the intention and there's actually pulling it off. Where were you making this argument when Polaris could only match Nvidia midrange GPUs, or when Vega 64 fell massively short? Radeon VII was supposed to be Vega done right.

Those threads were also full of a lot of hype. 5700 XT was supposed to be a top tier card in the discussion threads and that also left people crying after it turned out to be a 2070 competitor.

I do think it's genuinely bizarre how people seem to think AMD have spent two years targeting the performance metric of a two-year-old, previously released card and not the one they are releasing to actually compete with.

AMD has had a bad reputation from Tonga/Fiji to Polaris/Vega/Radeon VII; there was little innovation in GCN, and Nvidia were superior for many years.

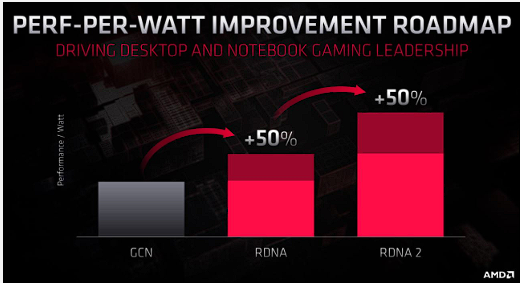

It wasn't until Navi was developed that AMD gained back around 50-60% perf/watt and were able to compete with mid-range Turing.

With the 7nm node and Navi2 there is definitely potential this time round.

If your viewpoint is based purely on Navi, then yes, OK, but don't act surprised that people aren't convinced due to AMD's history pre-Navi.

Associate

Commercial suicide? WTF?

Do you know how many cards AMD would sell if this was available this Monday?

In large quantity.

Millions. With a 350-360mm² die?

That would be a commercial gold mine.

Sadly we've got to wait till Oct 28 to see that, and then probably face a paper launch.

Oh let it not be true....

Yes, it potentially can be. Folks tend to ignore Intel Xe. AMD would be making higher margins, but market share may take a hit if they don't have a flagship by Xe's launch.

5700 XT was supposed to be a top tier card in the discussion threads

Only to people who completely ignored AMD saying they were not going to compete with the top end that release cycle.

Where were you making this argument when Polaris could only match Nvidia midrange GPUs, or when Vega 64 fell massively short? Radeon VII was supposed to be Vega done right.

None of those releases were competing against two-year-old cards. This cycle has explicitly been aimed not at Turing but at Ampere, and includes the high end. If they miss that, then they miss it, but they won't be aiming for 2080ti performance.

If your viewpoint is based purely on Navi, then yes, OK, but don't act surprised that people aren't convinced due to AMD's history pre-Navi.

Different aims and entirely different circumstances. I'm not saying AMD will destroy Nvidia, I'm saying that they won't be targeting the performance of a two-year-old card, and it's weird to think they would.

That would be commercial suicide from AMD.

Exactly, a card that sits between the 3070 and 3080 performance, for close to 3080 money, without the features and drivers Nvidia bring to the table, IS commercial suicide. Every PC gaming friend I've made over the last 20+ years would not take that offering from AMD. It just makes no sense from a buyer's perspective: why not just pay a fraction more for a card that performs better, is highly likely to have the more stable drivers, AND offers extras like DLSS etc.?

AMD won't succeed by just matching Nvidia on price and performance, not when they lack in other areas. They need to offer the same performance for less, or greater performance for the same money. Nothing other than those two options will convince buyers to miss out on Nvidia's stability and feature set.

Big Navi the 80cu one 10% quicker than the 2080ti for £600 I reckon.

Nvidia will counter with a 16GB 3070ti for the same price.

I can't imagine a world where a card with double the CU count (not to mention any IPC changes and/or clock speed bumps) would only gain ~40-45% over the 5700XT. I just don't see how that would be anything but a complete failure.

AMD has released an official statement that RDNA 2 is (over) 50% faster at the same TDP.

If RX 5700 XT is a 200-watt card, to achieve RTX 2080 Ti + 10%, you would need to actually lower the TDP of the Navi 10 replacement in order to get that end performance.

Navi 21 with 300-watt power consumption/TDP will have both 50% higher power budget and 50-60% higher IPC.

https://videocardz.com/newz/amd-promises-rdna-2-navi-2x-late-2020-confirms-rdna-3-navi-3x
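The TDP argument above can be put into numbers with a quick sketch. It takes the post's figures at face value (a 200-watt RX 5700 XT and a 50% gain at the same TDP) and assumes performance then scales linearly with power, which is optimistic for real silicon. The 1.45x target for "RTX 2080 Ti + 10%" is an assumption taken from the ~40-45% over the 5700XT mentioned earlier in the thread, and the function names are made up for illustration.

```python
# Back-of-envelope check of the TDP argument, using the post's own figures.
# Assumptions: the 5700 XT is a 200 W card, RDNA 2 is 1.5x faster at the same
# TDP, and performance scales linearly with power (optimistic in practice).
BASE_TDP_W = 200.0
GAIN_AT_SAME_TDP = 1.5
TARGET_PERF = 1.45  # "2080 Ti + 10%" as a multiple of the 5700 XT (assumed)


def perf_at(tdp_w: float) -> float:
    """Performance relative to a 5700 XT for an RDNA 2 card at tdp_w watts."""
    return GAIN_AT_SAME_TDP * tdp_w / BASE_TDP_W


def tdp_for(perf: float) -> float:
    """Watts an RDNA 2 card would need to reach perf (5700 XT = 1.0)."""
    return perf / GAIN_AT_SAME_TDP * BASE_TDP_W


tdp_needed = tdp_for(TARGET_PERF)
perf_300w = perf_at(300.0)

print(f"TDP needed for 2080 Ti + 10%: {tdp_needed:.0f} W")
print(f"Performance at 300 W: {perf_300w:.2f}x a 5700 XT")
```

On those assumptions the target is reached at about 193 W, below the 200 W starting point, and a 300 W card would come out at 2.25x a 5700 XT. Real scaling with power is well short of linear, so this is an upper bound on the argument rather than a prediction.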

AMD have released many false statements over the years.

Vega being an "overclockers dream" is one example...

Don't believe anything until you see official reviews.

I'm pretty simple. AMD fanboys keep shifting the goalposts of what they want from AMD to fit what they think they'll deliver.

I want a card which is faster than the 3080 with more VRAM and some form of raytracing capability which works with the current RT games and moving forwards.

If they can't match the speed but offer more VRAM than the 3080, then the AMD card is realistically going to become outdated at the same time or even sooner than the 3080.

In terms of features, NVIDIA are simply the king. They have their entire NVIDIA Freestyle with custom ReShade presets, their own sharpening filter which applies to more games, DLSS, VRSS, RTX which works when used, and a lot of games coming out where NVIDIA have clearly worked with the developers to make them fully capable with RTX. They also have better VR performance than AMD GPUs, and seem to have the edge on driver support recently (but YMMV). AMD also need to have two HDMI 2.1 ports available on their GPUs and support 4:4:4 10-bit 4K at 120Hz, not lock it to 8 or 12-bit (NVIDIA now support 10-bit).

AMD really need to bring raw performance and rasterisation. I don't care about power to a degree and efficiency. I care about the FPS and resolution I'll be able to target.

If AMD can't do this, then in my mind sadly they'll probably forever be the budget GPU manufacturer who give great bang for buck but don't have a clue at the high end with the best value CPUs on the market.

Both AMD and NVIDIA lie a lot so I don't trust too much of either's hype marketing. We're in a strange time in GPU land where NVIDIA gimped their cards with VRAM and don't seem to have a technological advancement this year but instead are just pushing the cards with power.

AMD on the other hand have historically over the past 2-4 years been non-existent at the high end of gaming. No timely 1080ti or 2080ti competitor.

Basically AMD have their homework. Hopefully they've managed it, but I think a few of us are holding onto hope AMD better NVIDIA based on some of the console-hype presentations from Sony, MS and AMD. Historically all 3 of them are pretty filthy liars when it comes to the "power" they harness, so let's see.

AMD have released many false statements over the years.

Vega being an "overclockers dream" is one example...

Don't believe anything until you see official reviews.

No, it doesn't work like that.

RTX 2080 Ti + 10% for £600 when there is a RTX 3080 ~ RTX 2080 Ti + 30% for £600 doesn't add up and will never happen.
