
10GB vram enough for the 3080? Discuss..

- Thread starter Perfect_Chaos

- Status

- Not open for further replies.

Associate

Time for a new career...........

No way... The 6800XT is perfect for my game development needs.

It's a beast and does everything I want.

Soldato

Associate

What's that about? Your Zotac 3080 OC is not better than my 6800XT Nitro Special Edition. If you offered me a swap I'd refuse.

My rig is better than yours.

Deleted member 651465

Guys, can I remind you that the graphics card subforum carries a strike system.

Please keep the discussion on topic and let's not resort to petty bickering. Thanks.

Permabanned

What statement? You would literally have to pay me to play Control. A game needs more than flashy effects to be "game of the year" in my opinion. The story of Control appears to be non-existent.

Maybe I'll give CP2077 a try when they get it to the state it should have been in at release. But my jank tolerance is very low, so that may take a while.

Some of the most fun games are from indies and small studios. Not huge generic AAA+ games.

So you have not played it, it "appears" not to have a story, and you are repeating that as a fact? It's a great game with a genuinely interesting story and loads of secrets to piece together. There's a good reason so many people give it their shout for game of the year. If you don't fancy it, that's cool, but to say there's no story when you know nothing about it is just weird, to be honest.

Agreed, can’t take anything from someone like that seriously. I’d hate to see what sort of game he develops

Staying on topic, didn’t have any VRAM issues with Control. 1440p, max everything including RT and it was a flawless experience. Did some tests with DLSS on and off but didn’t notice a drop in quality - great FPS boost though so I left it on as I have a 165Hz monitor.

Permabanned

I'm playing it now in 4K and I can see it's noticeably sharper with DLSS off at native 4K, but the performance increase is worth having it switched on. The effects are impressive when things start flying, but the style and art direction are the most impressive part for me. Walking into a new area and seeing the writing appear on the screen, or the mysterious shadow of the dude talking projected onto the area you are in, is super immersive storytelling.

Exactly, the RTX3080 is a card designed for 1080p

hehe, i definitely wouldn't say that.

Associate

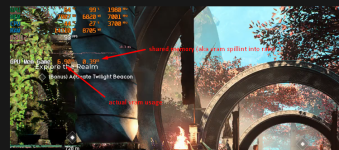

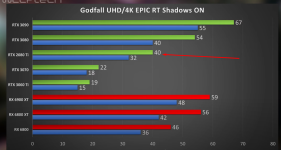

i debunked the myth of allocation:

https://www.youtube.com/watch?v=sJ_3cqNh-Ag

allocated vram shows as 7.7 gb and "dedicated usage" shows as 6.8 gb. yet the vram related FPS drop happens anyway, and it is huge. you would expect that with "real" vram usage of 6.8 gb, the game would not tank performance. yet it does

(7.7 gb is allocated for GODFALL alone, according to afterburner)

if it's really allocated in the sense of "empty vram", why does the vram spill to ram and tank the performance?

there is something different going on here with this vram debacle. after this incident, i stopped trusting the dedicated vram value, because it had no meaning whatsoever for this game

this game also debunks the myth that "gpu performance becomes bottleneck before vram is filled".

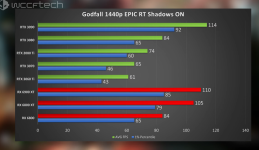

rtx 3070 can run this game at 1440p 50-60 fps ultra with ray tracing enabled. but once vram goes overboard, performance tanks to 30s. this is a huge performance deficit.

i tried lowering everything to medium and it still didn't help. it simply couldn't handle both 1440p and ray tracing at the same time

this benchmark is done by disabling everything in the background. no discord, no chrome. no extra vram consuming apps.

with discord enabled, even at 1080p you get frame drops.

i would like someone to test with a pretty normal pc config that has discord open in the background with 10-15 servers, plus geforce experience, and try running godfall at 4k ultra with rt on. i bet it will experience the same frame drops

Not sure what myth you're talking about, specifically which part of memory allocation is a myth?

I'd question why frame rate drops are happening in Godfall specifically, in general that's quite a low FPS to start with and I expect that's because Ray Tracing is enabled going by the menu options. That's going to be tough to run at 1440p on a 3070. Games can exhibit frame rate drops for any number of reasons, they don't have to be related to vRAM usage and I see no specific reason to believe it's vRAM related in this case, other than it potentially could be.

Bottlenecks are a complicated issue because each game has its own unique set of demands on hardware, so bottlenecks depend not just on the hardware configuration but also on what the software demands of that hardware. When we talk about GPU designers making video cards, we talk about them designing the hardware to meet the demands of a market in general. They don't make hardware to cover all plausible edge cases, so the discussion of things like bottlenecks is a very general one, in which you'd expect some exceptions. The more general point is that as vRAM usage goes up, so does the demand on the GPU. That's a rule that's broadly true among the typical games we see today. And video cards are designed to have components which are balanced in such a way that no significant bottleneck exists when running these games. A specific GPU is only going to reasonably be able to make use of a certain amount of vRAM, and the hardware is targeted at that to keep efficiency up, bottlenecks down, and price down.

I don't have Godfall so I can't test myself, but none of these tests really highlight vRAM specifically as the culprit. Just from experience alone we know that 1440p is a very hard resolution to run at with any kind of RT enabled, at least without DLSS. RT is run primarily on fixed function hardware and there's a limited amount of that on the GPU itself, so it's easy to overload that dedicated hardware by pushing the number of rays too high. How many rays you're shooting into the scene is completely developer dependent. It would be interesting to see some side by side tests that lower vRAM requirements, say with less texture detail, and see how the allocated vs used figures change when vRAM requirements are lower, and what performance impact that has.

i implore you to watch the video again, with more observant eyes.

observe shared memory. you will understand that framedrops occur because the game spills vram data into ram, and this is known to cause framedrops (please watch the video again while observing shared memory usage)

this game's ray tracing is light. but it uses a lot of vram

https://www.youtube.com/watch?v=sJ_3cqNh-Ag

you should literally observe that game "hard" stutters when shared memory usage increases. it is clear that at that moment game stutters because it starts to use shared memory pool. and at that point fps gets lowered.
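The pattern described here (shared memory growing at the same moment the frame rate falls) can be checked against a monitoring log rather than by eye. Below is a minimal Python sketch of that check. The `Sample` fields, the thresholds, and the readings in `log` are all made up for illustration; they are not taken from the video or from any particular monitoring tool's export format.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float             # seconds since the start of the capture
    dedicated_gb: float  # dedicated VRAM in use (hypothetical reading)
    shared_gb: float     # shared (system RAM) GPU memory in use
    fps: float           # frame rate at this moment


def spill_stutters(samples, shared_jump_gb=0.2, fps_drop_ratio=0.7):
    """Return timestamps where shared memory grew by at least
    shared_jump_gb AND fps fell to fps_drop_ratio of the previous
    sample or lower. Both thresholds are arbitrary assumptions."""
    hits = []
    for prev, cur in zip(samples, samples[1:]):
        shared_grew = cur.shared_gb - prev.shared_gb >= shared_jump_gb
        fps_fell = cur.fps <= prev.fps * fps_drop_ratio
        if shared_grew and fps_fell:
            hits.append(cur.t)
    return hits


# Invented readings, one per second, purely to exercise the function.
log = [
    Sample(0, 6.5, 0.3, 58),
    Sample(1, 6.8, 0.3, 57),
    Sample(2, 6.8, 0.9, 31),
    Sample(3, 6.8, 1.0, 30),
]

print(spill_stutters(log))
```

A match only shows that the two events coincide in the log. It does not rule out other causes of the drop, which is the objection raised earlier in the thread.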

vram is the culprit here. i'm 100% sure.

i get 80 fps in the same place at 1080p with the same settings. do you really think going from 1080p to 1440p causes fps to drop from 80 to 30?

i would say, unlikely.
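As a rough sanity check on that claim, here is a small Python sketch of the arithmetic. It assumes, as a simplification that is not stated in the post, that frame rate scales inversely with pixel count, which is close to a worst case for a purely GPU-bound scene.

```python
def pixels(width, height):
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


# 1440p renders about 1.78x the pixels of 1080p.
ratio = pixels(2560, 1440) / pixels(1920, 1080)

# Assumption: fps falls in proportion to the pixel count increase.
fps_1080p = 80
expected_fps_1440p = fps_1080p / ratio

print(f"pixel ratio: {ratio:.2f}")
print(f"expected 1440p fps under pure pixel scaling: {expected_fps_1440p:.0f}")
```

Under that assumption the resolution change alone would predict roughly 45 fps, so a fall to 30 fps is larger than pixel count explains. That is consistent with something else contributing, though the sketch cannot say what.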

Still waiting to encounter issues with 10gb here..... 4k and 3440x1440 gaming with max settings is lovely.

Also lol at people comparing godfall to likes of cyberpunk and control, godfall is crap looking in comparison to them games.

VRAM aside - a 3080 isn't fast enough for 4k max settings in all new games, at 60+ fps. The 3080ti will be a welcome upgrade, assuming it has a good 15% more performance.

Also, I don't consider DLSS to be worth using in the vast majority of games, as it makes the image look a blurry mess. The only games I'd ever consider using it, would be a really fast paced competitive shooter, where fps matters above all.

as i've said before, the rtx 3070 is capable of putting out 60+ fps at 1440p with rt enabled in this game. this benchmark does not show the issue. why? because it's a benchmark. in actual gameplay, you can see the vram related fps drops happen after 15 minutes of gameplay. so what, nvidia expects me to exit and re-enter the game every 10-15 minutes or so?

at 4k, this issue already exhibits itself natively, even at benchmarks.

i can, if i want to, force the issue at 1440p with benchmark with discord enabled in the background.

but i already deleted the horrible game, so no, i won't be doing any tests whatsoever. you may believe it or not. i simply couldn't play at 1440p with ray tracing enabled, not because of the 3070's power, but because of its vram deficit

You can "facepalm" all you want, quake 2 is creeping up on 25 years old ffs.

Not bad for a ~25 year old FPS and yes, I'm a @Rroff fan -

As mentioned, he stuck nearly 2 million tris in one test and there was virtually no slowdown. There is more to it than that, but at least as far as geometric complexity goes, the renderer is untroubled by significantly higher levels of detail than the Quake 2 stock maps.

This one shows 1.2million in use:

In this case he is just using one material on most surfaces (though it does have all stages in use) to simulate load and doesn't have complex use of materials involving caustics, etc. but still.

People massively underestimate the capabilities of the renderer in Quake 2 RTX and/or still assume it is using optimisations like Minecraft to work due to the simple nature of Quake 2's maps when it isn't.

Game doesn't have bullet impacts as standard - I've not got around to adding bullet impacts that render correctly with RTX yet so it is a bit ugly but will suffice to demonstrate

One of the things that really stands out for me as well is the reflected scattered light

(Ignore that the shell casings are floating and that it's an old model with 8bit colour skins and no RTX materials - I'm still very much prototyping)

The nice thing is you aren't limited to planes, etc. any object can have real time, accurate, reflections from all angles, etc. (though there is a limit currently on reflections of objects with refraction/reflections and/or multiple levels of transparency).

If you look at my test room screenshots (example below) it has large areas of glass on both sides, a large mirror and multiple glass, chrome and other reflective objects and is only 1.5% slower than the stock Quake 2 maps if that.

Typically the performance cost of rendering a scene in CP2077 with rasterisation maxed and RT maxed exceeds the impact it would have of rendering it via Quake 2's path tracer albeit the cost here comes at the constrained ray budget not being sufficient to remove noise from specular lighting to an ideal level.

EDIT: You can adjust the reflection/refraction bounces between 1 and 8, default 2, in Quake 2 RTX with only a moderate performance hit - obviously there is a point you don't get things rendered that would require more bounces to capture after a point hence the limited performance impact of increased scene complexity but there are few instances where that matters for a video game even though it would be nice to have higher.

It has its limits, and there is some way to go yet to get ideal results with an ideally low level of visual noise from using denoising approaches to make up for limited ray budgets, but the path tracer in Quake 2 RTX is much more than people give it credit for because they judge it from the stock Quake 2 maps.

Only Quake 2 RTX has a proper path tracing implementation of any sort which is fully used for all rendering (albeit even that isn't without some cheats*) - all the other games use a combination of some or all of using ray tracing only or partially in screen space, using simplified re-renders of the scene often missing a lot of details and often captured at a far lower resolution. In CP2077 for instance a small amount of ray tracing is used as a reference for scattered light and only from a limited number of sources (primarily the sun) and then the rest faked up using traditional techniques referenced against the smaller number of ray traced samples (I'm overly simplifying the system they use). A lot of the shadows are generic imposters projected into the scene rather than using proper ray tracing as well again with a limited amount of ray tracing used to guide the results. The sad thing is in many cases just getting a lite version of those features running still has a good slice of the performance overhead of a more complete solution for ray tracing the whole scene but is the minimum implementation required just to get some small level of the features in use.

Quake 2 RTX still has many compromises - especially the ray budget, which right now just doesn't allow for denoising results to an optimal level - but it is far, far more capable than people who haven't spent time experimenting with it allow for. It can significantly exceed the visual results of traditional techniques, even though it can't hit the heights of offline ray tracers yet, and it is in no way limited to the Quake 2 engine for results. We still ideally need 2-4x the ray budget to be able to satisfactorily remove noise from specular lighting, etc. Despite the claims of some, there is no reason why it can't be used to render a game like CP2077 with viable performance.

* For instance, specular lighting is only fully path traced out to medium distance - the specular lighting on far objects uses a fast approximate cheat which is mostly indistinguishable from the real thing, though not 100%. Caustics are only partially implemented (for time rather than performance reasons, as the stock maps don't really have features that would use them) and use a fast approximate simulation which can look quite fake in certain scenarios (but again, the developer never spent any time on it).

EDIT:

Turned off the sun light to show all the windows, etc. are doing reflections, etc.:

With lots of reflections and refraction, etc. as well as other features going on, there isn't a huge performance difference to a standard map, and this holds true for even more complex scenes as well. For LOLs I ran it at 4k on my 3070FE - 1440p is more where playable performance is at:

I've been playing quite extensively with the Quake 2 RTX implementation and working with a group that is expanding on its abilities (nail and crescent*) so I do have some idea.

* They've still got a long way to go but making some decent headway on expanding the Quake 2 RTX renderer https://www.youtube.com/watch?v=BIOJ6QURT5k

Did you play Godfall? Some would say it's a more fun game than ConTroll.

A video is better than a pic: https://youtu.be/7QwdbyVEJhY?t=216

Mind you this was taken 6 months ago so the performance has probably improved since then.

No, not touching the Epic store due to its console-like approach to exclusivity. I asked if you had some pics showing RT GI and reflections. The video doesn't look as though it has RT.

Soldato

You've really got a chip on your shoulder about games consoles?!

I'm a developer. Having 16gb VRAM is very nice. 8/10GB VRAM when dealing with raytracing in Unreal engine is not enough. 3090 was out of my budget.

This makes no sense to me at all. Sure some plugins require full levels to be loaded when baking maps for rasterisation, but breaking 8GB takes real effort in poor level optimisation, pre UE4 for Dummies level.

A quick search on google turned up https://www.pugetsystems.com/labs/a...5---NVIDIA-GeForce-RTX-3080-Performance-1876/. Looks like a decent read covering -

- Introduction

- Test Setup

- Overall Unreal Engine Performance Analysis

- Virtual Studio

- Megascans Abandoned Apartment

- Megascans Goddess Temple

- ArchViz Interior

- How well does the NVIDIA GeForce RTX 3080 perform in Unreal Engine?

While it is a bit odd that the RTX 3080 has less VRAM than the 2080 Ti, all these new cards should provide a significant performance boost in Unreal Engine. The RTX 3080 and 3090 (with 10GB and 24GB of VRAM respectively) should also have no trouble with 4K even with advanced ray tracing options enabled.

Since only the RTX 3080 is fully launched at this point (the 3090 is set to launch on Sept 24th, and the 3070 sometime in October), we unfortunately will only be able to examine the 3080 at this time. However, we are very interested in how the RTX 3070 and 3090 will perform, and when we are able to test those cards we will post follow-up articles with the results.

Perhaps you would like to show some of your work in UE4 and where you are having VRAM issues?

You've really got a chip on your shoulder about games consoles?!

Not really, no. They have a fantastic price / performance ratio. Would I take one over a PC? No.
