@ChrisD. Talk to me about Host Cache, VMDK cache, etc. I'm trying to work out where best to use my 6TB of NVMe. Can the drives be used to boost the performance of disk operations for, say, Exchange, effectively acting as a write-back flash cache in front of the datastores, or are they more for general use across the whole host? How would you use them? I plan on running a few quick tests on some servers to see how our SAN compares with something more modern, but beyond that I'm not sure whether they offer any significant performance advantage. Also, I can't answer why the options are not there on the other hosts: all have VM hardware version 15 and all run ESXi 6.7 U2. Could be EVC, so I will check that!

Basically, I'm trying to work out whether I should just fill the servers with NVMe, set it up in some kind of RAID and expose it to ESXi as local test datastores on each host. It would then be possible to use that storage for snapshots, but it seems a waste to use it only for that. My preference would be to aim for performance improvements rather than extra storage that is not shared between hosts.

I did some digging of my own last night, went through the performance best practices guide for 6.5 and noticed talk of vFRC (page 32 of the guide), which suggests that significant disk performance improvements can be made by adding a virtual flash infrastructure layer. This all sounds very interesting, but in practice what works well and what doesn't?

Oh, and one more thing: if I did expose a flash infrastructure layer that was local, would that have any impact on DRS and vMotion? So many questions!

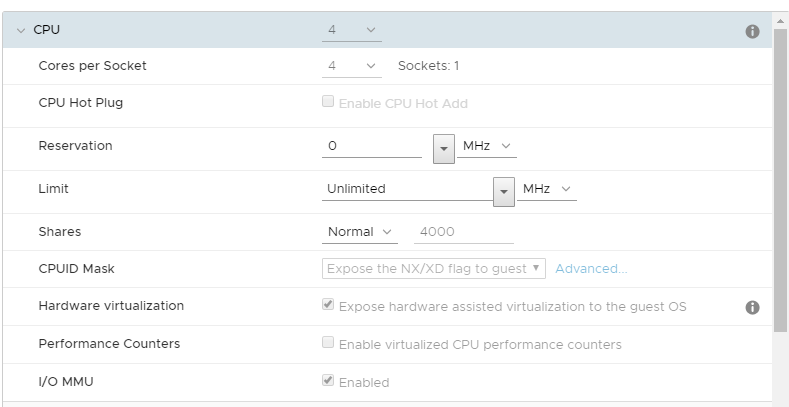

In terms of those options, everything from CPU mask down exists when editing VMs on my EPYC hosts but not on my Intel hosts. I don't necessarily think these options are EPYC-specific, though. Perhaps they only exist on newer chips?
