
JayzTwoCents ran a standard Time Spy test, which let me update my chart.

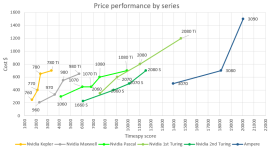

I think the pricing is off this gen. Turing, Pascal and Maxwell all had fairly linear pricing, with price rising roughly in step with performance. With Ampere, however, the 3080 offers significantly more performance for only a modest price increase, which skews it over to the right of the chart. Then, oddly, the huge price jump up to the 3090 buys you barely any extra performance. This is all at RRP, obviously.

Based on this, the 3080 is either too fast or too cheap.

If you put the 2080Ti in the $700-$800 range, where it should have been all along, price and performance line up steadily again.

It's not the 3080 that is out of place; it's the 2080Ti (and the non-Super Turing cards). I think the 3090 is priced where it is to capture some of the people who bought into what the 2080Ti offered over the 1080Ti. If people are willing to pay a lot more money for a small performance bump, it makes sense to cash in again.

Turing was a rip-off.
