Associate · Joined: 14 Apr 2014 · Posts: 598

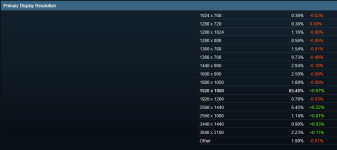

I think this coming generation will be the last of a painful run that started with Turing, the worst generation in the history of accelerated graphics. My rationale is simple: once we are over the 4K hump with decent framerates, you can add all the bells and whistles you like, but demand for high-end, overpriced products drops right off. To put it another way, you'll be able to comfortably sit on a product for 4+ years, and I think you'll see more people do that. I personally look to upgrade when I see double the performance on offer at a price I'm willing to spend. I would have bought a 2080 Ti at £700 two years ago, but that was not to be; that is now within the realm of possibility later this year.

You have to realise 4K is still extremely far from being the standard, and demand for high-end products will remain high, because by the time 4K is the standard, the people currently buying 2080 Tis and 3080 Tis will want to be gaming at 8K to 16K.